Projects

Statistical Process Monitoring of Artificial Neural Networks

We all fancy using AI models and brag about our trained neural networks with thousands of parameters. But do we know when to stop trusting the current version of a model and revise it, especially when the incoming data can be as evil to the model as shown on the right? This project develops a monitoring technique for understanding when something goes wrong with the model. It suits any type of neural network trained in a supervised manner and assumes that no ground truth is available during deployment.

Online network monitoring

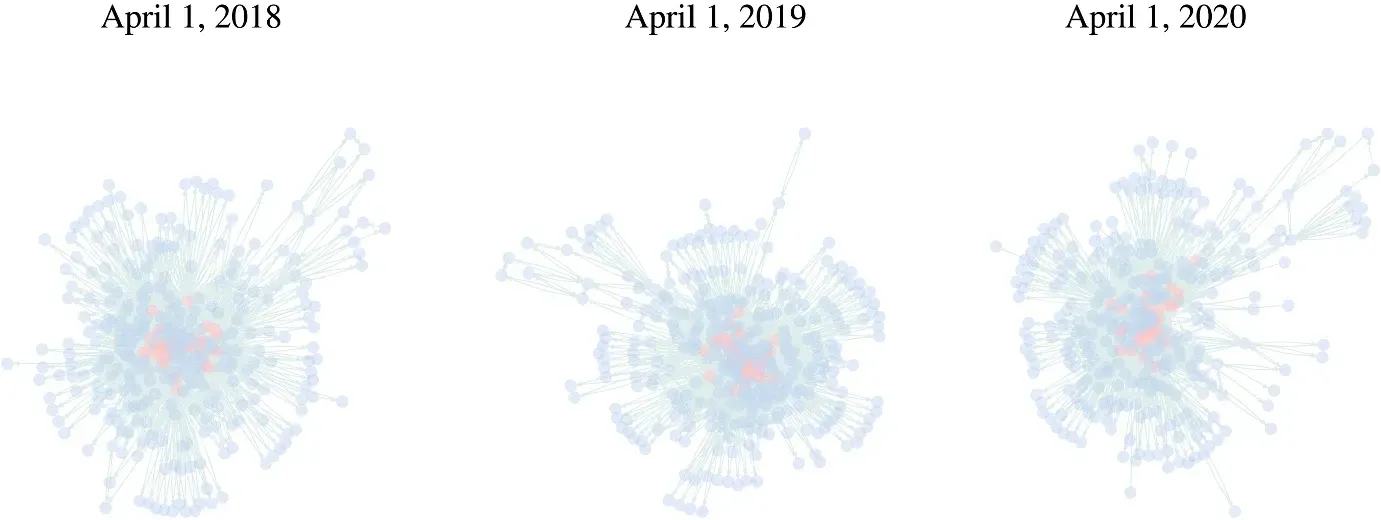

An important problem in network analysis is the detection of anomalous behaviour in real time. This project introduces a network surveillance method that combines network modelling with statistical process control, which allows it to account for temporal dependence. The effectiveness of the proposed approach is illustrated by monitoring daily flights in the United States to detect anomalous patterns.